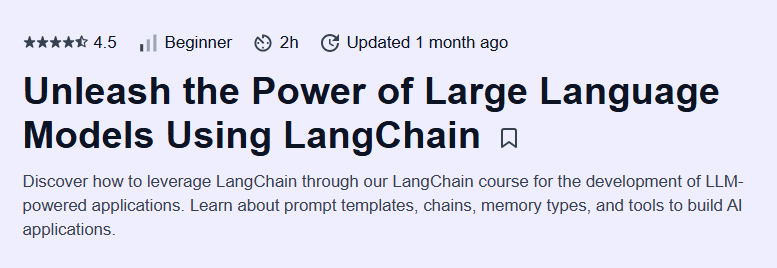

Unleash the Power of Large Language Models Using LangChain Course

A concise, hands-on LangChain masterclass that equips you to prototype and deploy powerful LLM workflows in just two hours.

What you will learn in the Unleash the Power of Large Language Models Using LangChain Course

Understand the core concepts of language models and the architecture of LangChain

Craft and manage prompt templates, parse LLM outputs, and handle message formats

Integrate external tools and services into LangChain workflows for extended functionality

Generate and work with embeddings, and store and query vectors in vector databases
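The last objective, embeddings plus vector search, comes down to three steps: turn each text into a vector, store the vectors, and rank stored texts by similarity to a query vector. The sketch below shows that flow in plain Python. It is not LangChain code. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `ToyVectorStore` is a hypothetical class whose `add_texts` / `similarity_search` method names only loosely mirror the LangChain vector store interface.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a sparse bag-of-words count vector.

    Real embedding models return dense float vectors that capture meaning;
    this stand-in only captures shared words, but the ranking logic is the same.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (text, vector) pairs and ranks by cosine."""

    def __init__(self) -> None:
        self._rows: list[tuple[str, Counter]] = []

    def add_texts(self, texts: list[str]) -> None:
        # Embed once at insert time so each query only embeds the query string.
        for text in texts:
            self._rows.append((text, embed(text)))

    def similarity_search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
store.add_texts([
    "LangGraph routes requests between agents",
    "Embeddings map text to vectors",
    "Prompt templates fill variables into text",
])
print(store.similarity_search("how do embeddings turn text into vectors?", k=1))
```

A production setup would replace `embed` with a model-backed embedding and the list with a vector database. The "embed on insert, embed the query, rank by similarity" shape stays the same.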

Program Overview

Module 1: Introduction to LangChain

⏳ 25 minutes

Topics: What Is a Language Model?; What Is LangChain and Why Does It Matter?; Use Cases of LangChain

Hands-on: Complete the initial interactive lessons to grasp core LangChain components and real-world integration scenarios

Module 2: Exploring LangChain

⏳ 45 minutes

Topics: Chat Models, Messages, and Prompt Templates; Parsing Outputs; Runnables & Expression Language; Tools; Embeddings & Vector Stores

Hands-on: Build and test simple chains: craft prompts, parse outputs, invoke tools, and run similarity searches against vector stores
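LangChain's Expression Language composes a prompt, a model, and an output parser with the `|` operator and runs the result with `.invoke()`. The plain-Python sketch below imitates only that composition idea so it runs without an API key. `Step`, `fake_model`, and the canned JSON reply are hypothetical stand-ins, not LangChain classes.

```python
import json
from typing import Any, Callable


class Step:
    """Hypothetical stand-in for a LangChain Runnable: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Composition: the output of self becomes the input of other.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# 1. Prompt template: fill named variables into a list of chat-style messages.
prompt = Step(lambda variables: [
    {"role": "system", "content": "Reply with JSON containing a 'summary' key."},
    {"role": "user", "content": f"Summarize in one line: {variables['topic']}"},
])


# 2. Fake chat model: returns a canned JSON string instead of calling a real LLM.
def fake_model(messages: list[dict]) -> str:
    user_text = messages[-1]["content"]
    return json.dumps({"summary": f"(stub) {user_text}"})


model = Step(fake_model)

# 3. Output parser: turn the raw model text into a Python dict.
parser = Step(json.loads)

chain = prompt | model | parser
result = chain.invoke({"topic": "vector stores"})
print(result["summary"])
```

In LangChain itself, the same shape is typically written with a `ChatPromptTemplate`, a chat model, and a parser such as `StrOutputParser` or `JsonOutputParser`, joined by `|`.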

Module 3: LangGraph Basics

⏳ 45 minutes

Topics: What Is LangGraph?; Main Components of LangGraph; Why Traditional Chains Fall Short; How to Create a Routing System; LangGraph Quiz

Hands-on: Configure and evaluate a router chain to orchestrate multi-agent workflows dynamically
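A router classifies each request and hands it to a specialized handler. In LangGraph this is usually expressed as conditional edges on a `StateGraph`. The plain-Python sketch below shows only the control flow. A keyword check stands in for the LLM that would normally choose the route, and every function name here (`classify`, `data_agent`, `run`, and so on) is hypothetical.

```python
from typing import Callable, TypedDict


class State(TypedDict):
    question: str
    route: str
    answer: str


def classify(state: State) -> State:
    # Stand-in for an LLM call that labels the request; here a keyword check.
    q = state["question"].lower()
    if "summar" in q:
        route = "summarize"
    elif any(word in q for word in ("csv", "column", "average")):
        route = "data"
    else:
        route = "general"
    return {**state, "route": route}


# Specialized "agents": each one only fills in the answer for its own kind of task.
def data_agent(state: State) -> State:
    return {**state, "answer": "[data agent] would query the CSV here"}


def summarize_agent(state: State) -> State:
    return {**state, "answer": "[summarizer] would condense the text here"}


def general_agent(state: State) -> State:
    return {**state, "answer": "[general agent] would answer directly"}


HANDLERS: dict[str, Callable[[State], State]] = {
    "data": data_agent,
    "summarize": summarize_agent,
    "general": general_agent,
}


def run(question: str) -> State:
    state: State = {"question": question, "route": "", "answer": ""}
    state = classify(state)
    # Conditional edge: the route label selects the next node to run.
    return HANDLERS[state["route"]](state)


for q in ["What is the average price column?", "Summarize this report", "Hi there"]:
    print(q, "->", run(q)["route"])
```

Keeping classification separate from the handlers means you can add a new branch by registering one more handler, without rewriting the existing agents. A graph framework builds on this separation to add loops and retries.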

Module 4: Wrapping Up

⏳ 10 minutes

Topics: Integrating LangChain with LLMs, dynamic agents, and future possibilities

Hands-on: Finalize the course with a practical wrap-up and explore the “Query CSV Files with Natural Language Using LangChain and Panel” project


Job Outlook

The average Artificial Intelligence Engineer salary in the U.S. is $106,386 per year as of June 2025

Employment of software developers, quality assurance analysts, and testers is projected to grow 17% from 2023 to 2033

Proficiency with LLM frameworks and prompt engineering is central to roles such as AI Engineer, Machine Learning Engineer, and AI Consultant

LangChain expertise is increasingly sought after for building chatbots, retrieval-augmented generation systems, and custom LLM services

Pros

- Fully interactive, in-browser coding environment eliminates setup overhead

- Clear progression from basic chains to complex multi-agent workflows

- Real-world project example ("Query CSV Files with Natural Language") reinforces learning

Cons

- Text-based format may not suit learners who prefer video instruction

- Limited depth on deployment and scaling best practices outside of core LangChain APIs


FAQs

Can LangChain work with AI models other than LLMs?

- LangChain can orchestrate workflows with traditional ML models alongside LLMs.

- Supports API calls to AI services for classification, vision, or speech tasks.

- Custom modules can extend LangChain to specialized AI pipelines.

- Enables hybrid systems combining LLM reasoning with external analytics.

- Useful for enterprise projects needing multi-model integration.

Can LangChain handle real-time data?

- Chains can be configured to process continuous data streams via async calls.

- Vector stores can update dynamically to support real-time retrieval.

- Tools and agents can react immediately to incoming data.

- Can be connected to message queues such as Kafka or RabbitMQ, with a consumer invoking chains on each incoming message.

- Enables chatbots and monitoring systems to respond in near real-time.

How can LangChain applications be deployed at scale?

- LangChain chains and agents can be containerized using Docker.

- Orchestrated workflows can run on cloud platforms like AWS, Azure, or GCP.

- Vector databases like Pinecone or Weaviate support high-volume queries.

- Monitoring and logging frameworks help track reliability and performance.

- Scalable architectures allow multi-agent coordination in production environments.

How does LangGraph help with multi-agent workflows?

- LangGraph enables routing between different chains and LLM agents.

- Helps divide large tasks into specialized agent workflows.

- Allows dynamic selection of models based on task requirements.

- Simplifies orchestration for tasks like document summarization or multi-step reasoning.

- Reduces complexity compared to manually chaining multiple agents.

What career roles does this course prepare you for?

- AI Engineer or Machine Learning Engineer building LLM applications.

- Prompt Engineer designing efficient workflows for LLM outputs.

- AI Consultant advising enterprises on retrieval-augmented generation systems.

- Chatbot Developer for customer service and automation solutions.

- Technical Trainer or content creator specializing in LangChain and LLM frameworks.